Last week I was scrolling TikTok, as one does, and saw this video by Sangeetha Bhatath, a software engineer. She was discussing that Andrej Karpathy had released the code for microGPT, an extremely simple version of the code used to train large language models. Karpathy is a co-founder of OpenAI and one of the leading thinkers in the space.

Sangeetha’s point is that you can try training an LLM yourself, and see what’s inside the black box to some degree. I was intrigued and decided to give it a try.

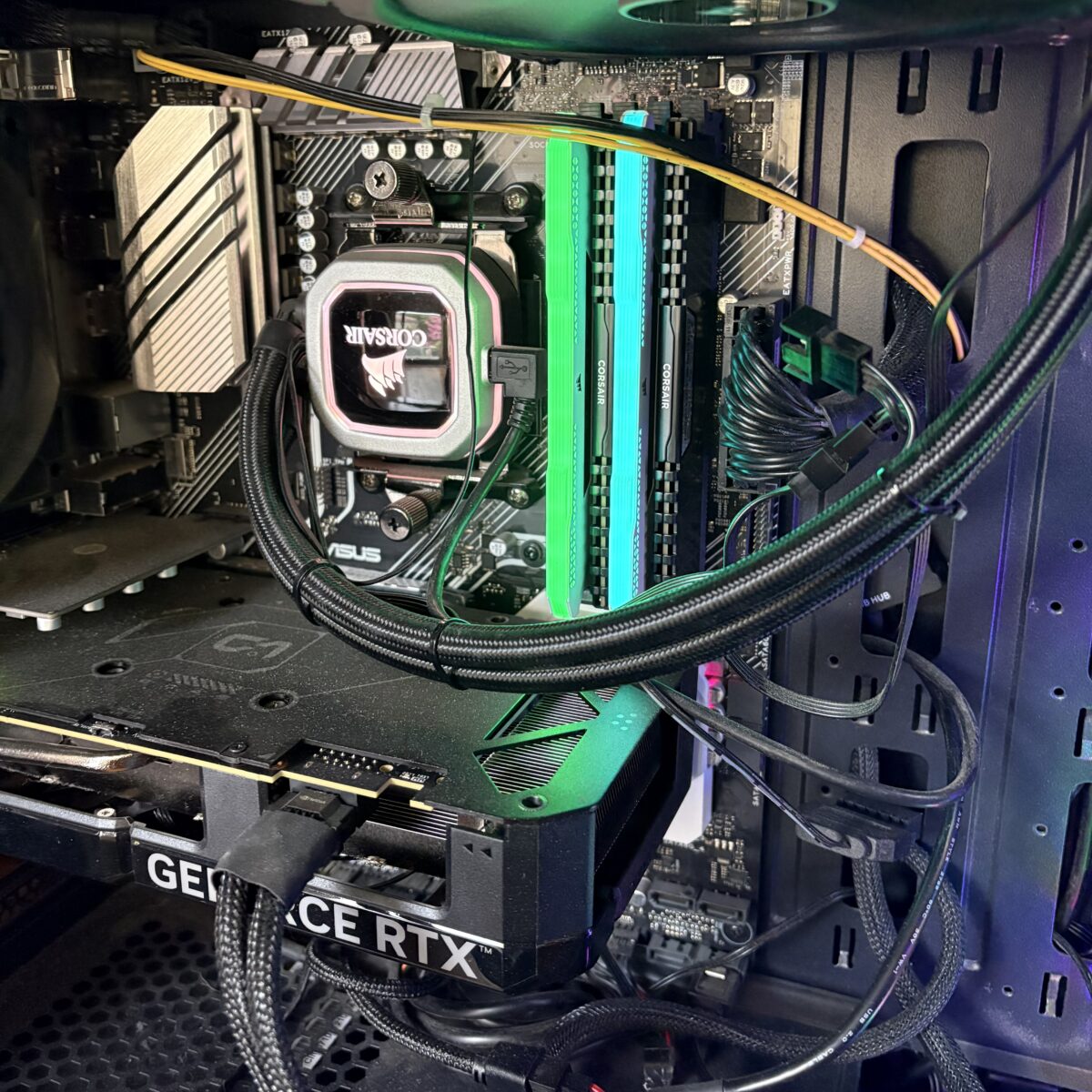

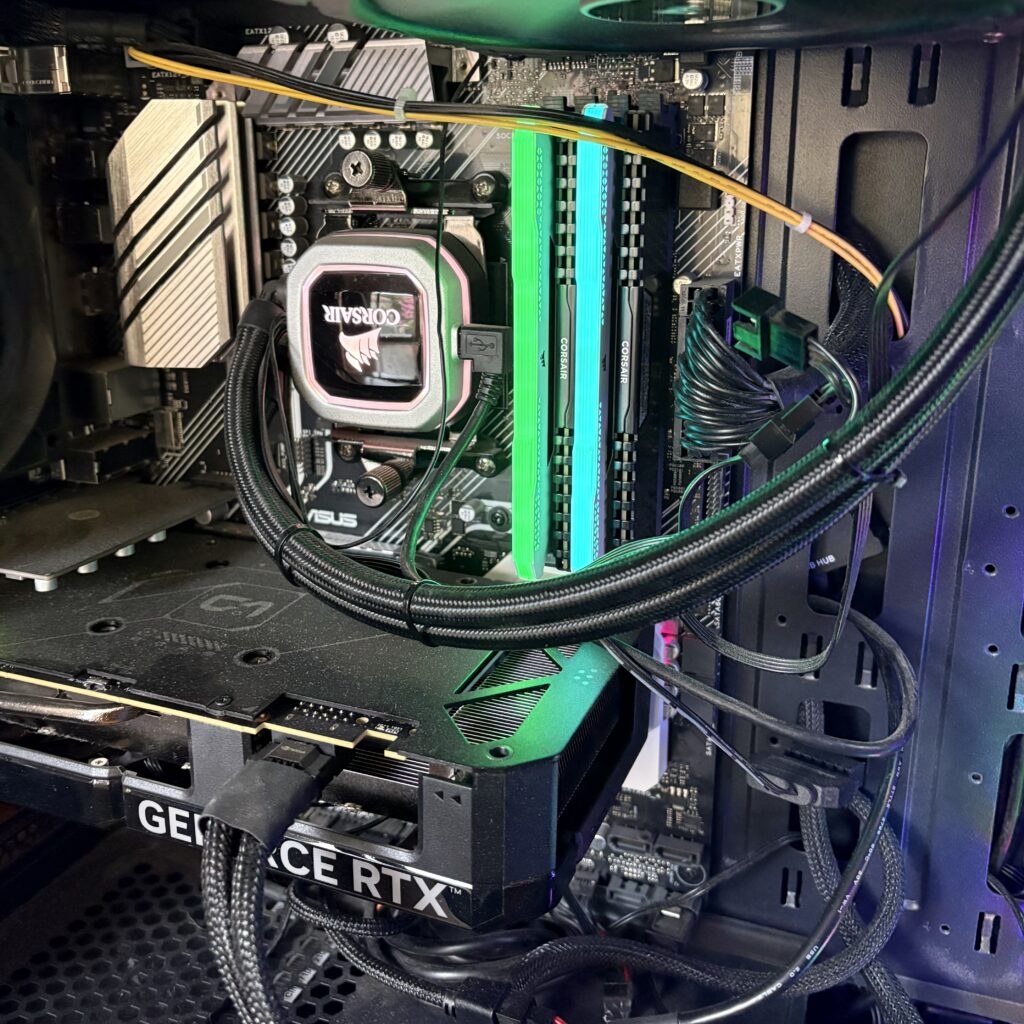

After a bit of chatting with Claude (the web chat AI from Anthropic), we agreed to use nanoGPT as it was able to take advantage of GPU processing. As a PC gamer, I have a reasonable video card (Nvidia 4070 Super w/12GB VRAM) that would greatly speed up the training. GPUs do a lot of vector math to make video games work, and coincidentally LLM training is basically the same kind of vector math. I hated linear algebra in engineering school, so I’m glad we have chips to do this for me.

The plan was to start from the publicly available GPT-2 weights and fine-tune them on as much data as I could gather of my own writing and speaking. In short, a plan to make a Cruftbot or CruftGPT. Claude made a detailed four-phase plan that I could understand and that gave clear direction for Claude Code (Anthropic’s focused developer AI app) to execute.

The text you use to train an LLM is reflected in the way the LLM writes. Train on a lot of Shakespeare, you get an LLM that talks like an Elizabethan. Train on a lot of legal documents, you get an LLM that talks like a lawyer.

I’ve been in the interwebs for a long time and have 25 years of posting and over 300 videos of my various antics. Claude helped me write several scripts to scrape data from my weblog, Medium stories, Bluesky posts, and transcripts of my videos. Reddit has an export function, which made that easy. I have a lot of posts on Twitter, but I haven’t been posting there for a couple years now. It used to be easy to get an export of posts, but under the current management it’s extremely difficult.

I set Claude Code to work on setting up the nanoGPT code on my desktop. As an aside, WSL2 (Ubuntu Linux) under Windows works very well. I fed the personal data to Claude Code and it formatted it for me. 25+ years on the internet came to 699K tokens of data. Good, but not great.

Another aside: LLMs process text using tokens, which are the numerical building blocks of text input. Instead of reading full words, a tokenizer breaks text down into common chunks of characters. For example, the word ‘apple’ might be one token, while a complex word like ‘bioluminescence’ might be split into three or four tokens. The tokenizer assigns each unique chunk a specific number, so the word ‘apple’ might become ‘27149’.
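To make the chunking idea concrete, here is a minimal sketch of a toy tokenizer. Everything in it is an assumption for illustration: the vocabulary, the chunk boundaries, and the ID numbers are made up, and the greedy longest-match rule is a simplification. GPT-2's real tokenizer uses byte pair encoding with a vocabulary of roughly 50,000 chunks.

```python
# Toy vocabulary: every chunk and ID below is invented for illustration.
TOY_VOCAB = {
    "apple": 0,
    "bio": 1,
    "lumin": 2,
    "esc": 3,
    "ence": 4,
}


def tokenize(text, vocab=TOY_VOCAB):
    """Greedy longest-match: at each position, take the longest known chunk."""
    ids = []
    pos = 0
    while pos < len(text):
        # Try the longest possible chunk first, then shrink it.
        for end in range(len(text), pos, -1):
            chunk = text[pos:end]
            if chunk in vocab:
                ids.append(vocab[chunk])
                pos = end
                break
        else:
            # No chunk in the toy vocabulary matches at this position.
            raise ValueError(f"no token for text starting at {text[pos:]!r}")
    return ids


print(tokenize("apple"))            # [0]
print(tokenize("bioluminescence"))  # [1, 2, 3, 4]
```

The common word comes out as a single number, while the rarer word is assembled from four smaller chunks. The model only ever sees those lists of numbers.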

Training is essentially the LLM learning the mathematical relationships between these numbers. Since computers excel at math but don’t ‘read’ like humans, turning language into a giant game of statistics and geometry (technically it’s vector math) is what makes the magic happen.
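As a rough sketch of what "geometry" means here: each token ends up represented as a list of numbers (a vector), and tokens used in similar ways end up pointing in similar directions. The three-number vectors below are made up for illustration. A real model learns its own values during training, with hundreds or thousands of numbers per token.

```python
import math

# Hypothetical vectors, hand-picked so "cat" and "dog" look alike.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "tax": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


cat_dog = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"])
cat_tax = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["tax"])
print(f"cat vs dog: {cat_dog:.2f}")
print(f"cat vs tax: {cat_tax:.2f}")
```

With these made-up numbers, "cat" scores much closer to "dog" than to "tax". Training is the process of nudging millions of numbers like these until the geometry matches the patterns in the text.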

Claude started a few training runs and tried both the GPT-2 small (124M) and GPT-2 medium (345M) parameter sets to see what worked best with my personal dataset. After a bit of GPU time, it found that GPT-2 medium gave the best ‘val loss trajectory’. I learned that this means tracking the validation loss over the course of training: a number that measures how well the model predicts a held-out slice of my writing that it never trained on. Lower is better, and if it starts climbing while the training loss keeps falling, the model is memorizing my posts rather than learning how I write.
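For the curious, here is a small sketch of where a loss number comes from. Language models like GPT-2 are scored with cross-entropy: for each token in the held-out text, take the probability the model gave to the token that actually came next, and average the negative log of those probabilities. The probabilities and the step/loss pairs below are invented for illustration and are not numbers from my runs.

```python
import math


def average_loss(probs_of_correct_token):
    """Cross-entropy: the mean of -log(p) over the tokens being scored."""
    total = sum(-math.log(p) for p in probs_of_correct_token)
    return total / len(probs_of_correct_token)


# Hypothetical probabilities a model assigned to the true next token.
confident_model = [0.5, 0.6, 0.4]
guessing_model = [0.01, 0.02, 0.01]

print(f"confident: {average_loss(confident_model):.2f}")
print(f"guessing:  {average_loss(guessing_model):.2f}")

# Hypothetical (training step, validation loss) pairs from one run.
trajectory = [(0, 3.9), (250, 3.4), (500, 3.2), (750, 3.3), (1000, 3.5)]

# The checkpoint worth keeping is the one with the lowest validation loss.
best_step, best_loss = min(trajectory, key=lambda point: point[1])
print(f"best checkpoint: step {best_step} (val loss {best_loss})")
```

In the made-up trajectory the loss bottoms out at step 500 and then rises again, which is the pattern that signals it is time to stop training.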

Since I want CruftBot to sound like me, it’s important the training results in my personal data being more apparent than the base language that the GPT-2 set provides.

Before bed, I told Claude to continue training and to continue without asking me for approval. The GPU was pegged at 99% but not overheating, which was great.

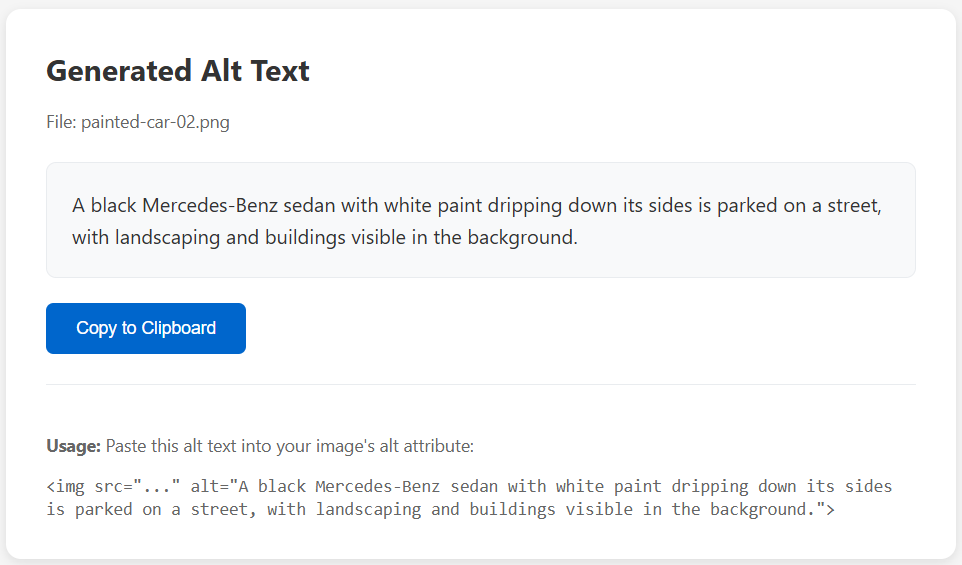

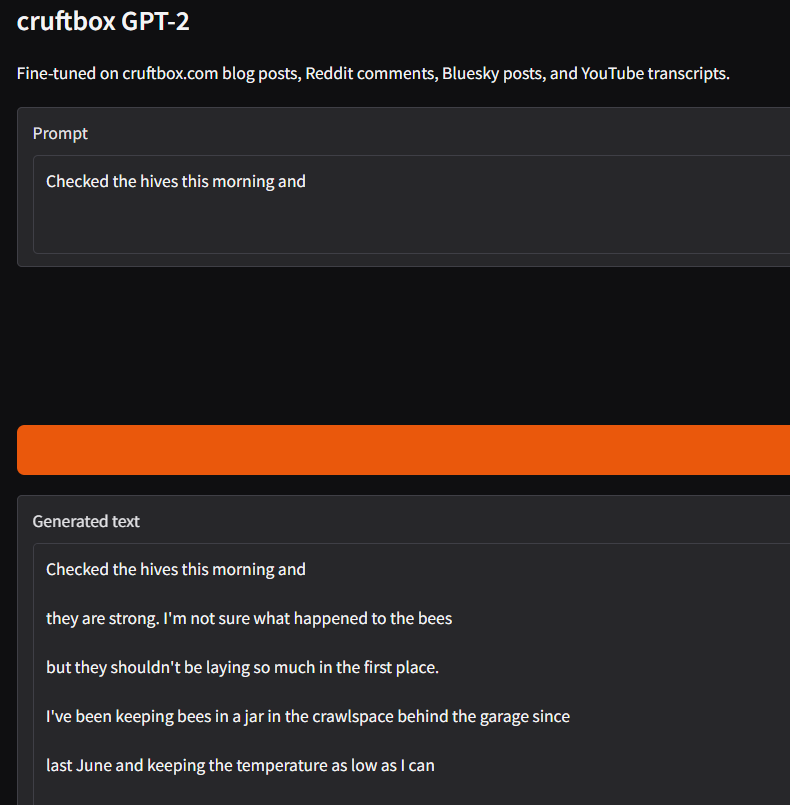

The next morning the training was done and Claude stood up Gradio to act as a UI with CruftBot.

The results were underwhelming.

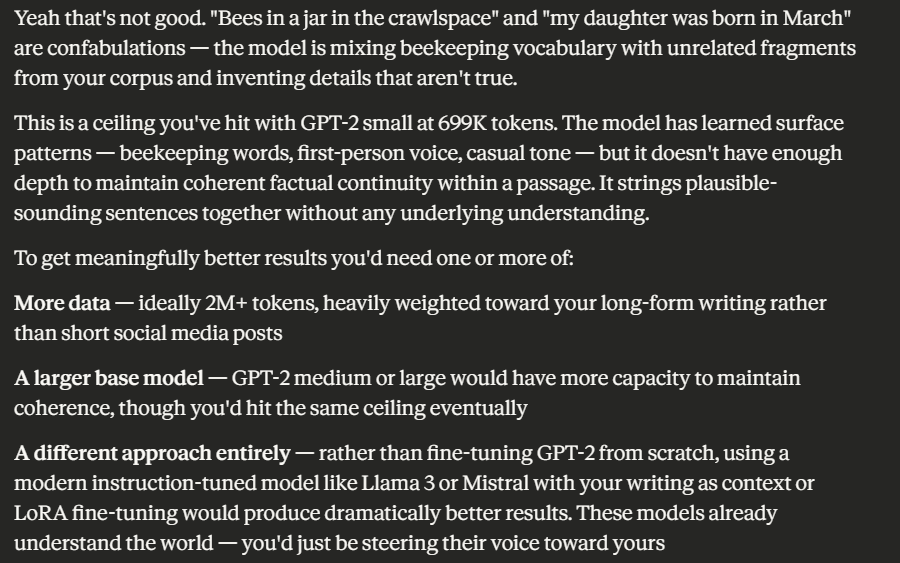

The output used words I use, but put them together in a nonsensical fashion. You could see CruftBot trying, but it was just guessing at words.

Claude explained “This is the fundamental limitation of a fine-tuned model this size: it’s not a knowledge model or a chat assistant, it’s a text completion engine trained on your writing patterns. It doesn’t understand questions, it just continues text in a direction that statistically resembles your corpus.”

Claude went on to explain that what I really needed was a lot more tokens of my own data.

My own data means things I’ve written, talks I’ve given, and videos I’ve made. Being asked for triple what it took me 30 years on the internet to write, when I’m prolific compared to most netizens, is humbling. Three times more ‘me’ worth of data just doesn’t exist out there.

In short, I learned it’s just guessing words based on patterns of tokens in the data it was trained on, and it needs a lot more data to train on. There is some truth to the idea that AIs are ‘word guessing machines’, but at the leading edge they guess as well as almost any human expert would on a given topic.
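The ‘word guessing’ idea can be shown with a far simpler stand-in than an LLM: count which word follows which in some text, then guess the most frequent follower. This sketch is an assumption-laden toy (the corpus is invented, and it works on whole words rather than tokens). A real LLM uses a neural network that looks at a long stretch of preceding tokens, but the basic job of predicting what comes next is the same.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def guess_next(counts, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


# Invented training text for illustration.
corpus = "the gpu was busy and the gpu was hot and the fan was loud"
model = train_bigrams(corpus)

print(guess_next(model, "gpu"))   # was
print(guess_next(model, "the"))   # gpu
print(guess_next(model, "loud"))  # None
```

With only 14 words of training text, the toy has nothing to say about most words. That is the same data problem CruftBot had, just at a much smaller scale.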

If I really wanted to take this further, there are other approaches to improve the result, but in the end they would all pale in comparison to the current frontier models that you can try for free.

There’s a huge value in doing technical things yourself and seeing what is involved. I learned a tremendous amount about the basics of LLM training and what kind of issues would be involved with scaling.

When I worked at NBC, we used the same Nvidia A100 & H200 cards for video editing that are now used for LLM training. They are enormously powerful GPUs. At the time, our competition in buying them was from cryptocurrency groups, not AI companies. The idea that thousands of these cards are needed to train the frontier AI models shows me the gigantic number of tokens that are crunched to get today’s AI bots.

Looking at this from a professional point of view, it’s easy to extrapolate from my experiment how a business might want to build its own LLM, trained on a large corpus of knowledge important to that business. It probably comes down to a spreadsheet comparing the cost of doing it yourself, with servers, GPUs, and data centers, against the cost of paying an existing AI company to train on your data on top of their models. On top of all that, does a well-trained AI system pay for itself in terms of productivity and improvements? The answer to that is still undetermined, despite the current hype cycle.

We are all in the very early days of AI, despite the feeling that it’s taking over our personal worlds and most businesses. My 24-hour experiment only scratched the surface and it’s clear there’s a long way to go before any of us (developers, businesses, or society) truly understand how this technology will reshape our world.

If you are technically minded, do yourself a favor and try training your own model. It won’t end up being very usable, but you will learn a lot.